Artificial intelligence (AI) is expected to significantly disrupt the workplace and, in particular, to change how employers make decisions about hiring, firing, and promotions. According to one prediction, up to 40% of employers will use AI to interview job candidates instead of conducting in-person interviews by the end of 2024. Unions such as the Writers Guild of America (WGA), the Communications Workers of America (CWA), and the AFL-CIO have all sought to protect their members from the harmful effects of AI's disruptions in the workplace. Labor scholars such as Annette Bernhardt, Sarah Hinkley, and Lisa Kresge at the UC Berkeley Labor Center, as well as Brishen Rogers, have also provided important analyses of AI's impact on workers and worker power. Civil rights and non-profit advocacy organizations, along with academic commentators, have raised concerns that, without proper guardrails, employers and law enforcement can deploy AI to discriminate against historically marginalized groups. They are not alone: several companies have asked to be regulated in light of discrimination concerns. The Equal Employment Opportunity Commission (EEOC) has issued extensive technical assistance to help organizations comply with our country's civil rights laws and prevent discrimination on the basis of race, religion, and gender.

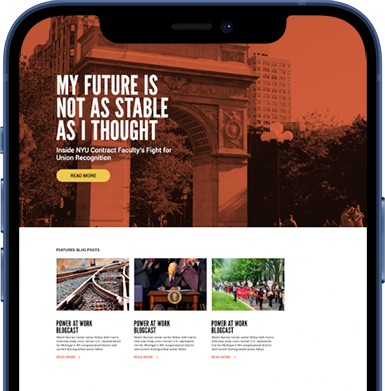

All of these organizations are right to raise these concerns. But there is an unspoken discrimination in hiring that is buried deep within the psyche of most employers in the United States that the current literature is curiously silent about: discrimination against union activists or those whom predictive analytics indicate are likely to become union activists. I want to use this blog post to break that silence.

Photo from Freepik

There are two possible reasons for the silence I describe above. The first and most obvious is that the National Labor Relations Board (NLRB) has long held that employers who discriminate against an applicant in order to encourage or discourage membership in any labor organization violate Section 8(a)(3) of the National Labor Relations Act ("the Act"). The Supreme Court has also held that union organizers who work for an employer as "salts," or embedded organizers, are "employees" protected by the Act. The silence could simply reflect that the law is indisputably settled and that employers have adjusted their conduct to avoid violating it. However, as numerous labor scholars, such as Kate Bronfenbrenner, have argued, the Act's weak penalties give anti-union employers an incentive to take their chances, and they have done so. Remember, even politically progressive employers have vehemently resisted union organizing drives. Many of these institutions have embraced the anti-discrimination values underlying our canonical civil rights laws by adopting diversity, equity, and inclusion (DEI) initiatives, yet they resist unionization because collective bargaining shifts some power over corporate affairs away from management.

With this in mind, the other possible reason for this silence is that most AI vendors operate and code within a Western capitalist economic system that favors corporate interests, and by extension management interests, over the interests of workers. Remember, AI decision-making processes remain opaque to both programmers and AI ethicists. Because of this bias and opacity, an AI programmer can design a hiring program that helps select conformist candidates who will not disrupt the workplace without ever entering the magic word "union." Creative coding that leaves no evidence of intentional discrimination against likely union supporters could get around the NLRB's case law. That is because the NLRB has not adopted a "disparate impact" theory of discrimination when enforcing Section 8(a)(3)'s protection of salts. According to the Cornell Legal Information Institute's Wex dictionary, "[a] disparate impact policy or rule is one that seems neutral but has a negative impact on a specific protected class of persons." As a result, American workers seeking to organize face a difficult challenge in showing that an employer has used AI to discriminate against them. If a worker files an unfair labor practice charge and the NLRB decides to issue a complaint, the Board's litigators will have to seek discovery into the programming behind the AI algorithm and uncover direct evidence of anti-union bias.

It is also worth noting that even if the Board adopted a disparate impact theory and its litigators successfully prosecuted a case against an employer, the Board's remedies would still be feckless, as Seth Harris and Diana Reddy have described them on this blog. They pale in comparison to the penalties under the EU AI Act, whose Art. 5(1)(g) prohibits using biometric categorisation systems to infer a person's trade union membership and whose Art. 99 attaches significant administrative fines to that prohibited practice.


Successfully pursuing a claim of AI-powered discrimination through the NLRB's adjudicative process would certainly challenge any attorney, but the exercise is worthwhile if the NLRB's current General Counsel (GC) is looking for novel enforcement actions that implicate emerging technologies. The GC indicated that this is one of her goals in GC Memo 23-02. While that memo outlines the GC's enforcement priorities for algorithmic tools that management could use to engage in surveillance, hiring managers could just as easily use the same tools to discriminate against union activists, perhaps without even knowing or intending that they are doing so.

To paraphrase Isaiah 55:8-9 in the Christian Bible, the thoughts and ways of generalized AI programs, and of the business rhetoric on which they are trained, are not the ways of labor. The generalized AI applications most people use are trained on a generalized, publicly available knowledge base. The decline of union density to 10% of the workforce, coupled with the sheer volume of academic, trade, and press materials produced by pro-business organizations, including many that have nothing to do with labor, means that generalized AI applications will lean toward outcomes that are the most popular and profit-maximizing: that is, pro-business information. As Lauren Leffer writes in Scientific American, "[t]he key to developing flexible machine-learning models that are capable of reasoning like people do may not be feeding them oodles of training data. Instead, a new study suggests, it might come down to how they are trained." Right now, generalized AI is being fed a high-volume diet containing very few pro-union nutrients. No union that I am aware of has created its own pre-trained transformer (the PT in ChatGPT). That means the AI market is loaded with pro-business material and lacks a strong functional counterweight raised on the values of worker solidarity that unions promote.

This blog post is simply an attempt to raise an issue that may have implications for both organized and unorganized labor. I hope that more scholars will examine how employers' unconscious biases can filter into AI and affect the hiring not only of the historically marginalized groups that our country's civil rights laws protect, but also of unorganized workers in the United States. That scholarship could hold an important key to fulfilling the NLRA's purpose of encouraging union organizing and collective bargaining.

Alvin Velazquez is a Visiting Associate Professor at the Indiana University Maurer School of Law.